Screw AI that makes fake humans look real, learns every language on earth, and predicts crimes. AI will only really arrive when it can generate an entire food porn Instagram channel by itself, just by reading recipes that tell it how to make food in the real world.

Until now, text-to-image artificial intelligence produced synthetic images from visually descriptive phrases like “this pink and yellow flower has a beautiful yellow center with many stamens” or “a small bird with black eye, black head, and dark bill.” Microsoft has a system so good that it could theoretically replace Google Images’ results with photorealistic fakes.

An important development in AI

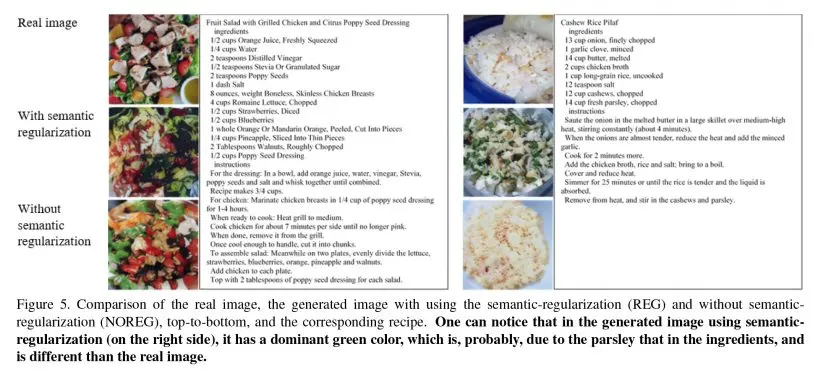

But the new AI developed by computer scientists Ori Bar El, Ori Licht, and Netanel Yosephian at Tel Aviv University doesn’t require you to visually describe anything: it can generate fake photos of food from text recipes that list the ingredients and the method of preparation but contain no visual description of the finished plate. The AI wasn’t allowed to read the recipe’s title, since a title may be descriptive enough on its own. It used only the ingredients and the instructions.
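To make the input rule concrete, here is a minimal sketch of what the model is allowed to see. The `Recipe` class and the `model_input` helper are hypothetical names for illustration, not the researchers' code; the only point it shows is that the title is dropped and the ingredients and instructions are kept.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Recipe:
    # Hypothetical container for one recipe, used only for illustration.
    title: str
    ingredients: List[str] = field(default_factory=list)
    instructions: List[str] = field(default_factory=list)


def model_input(recipe: Recipe) -> str:
    # Deliberately ignore recipe.title: a name such as "Tomato Soup"
    # could describe the finished dish on its own.
    return "\n".join(recipe.ingredients + recipe.instructions)
```

For a recipe titled "Tomato Soup", `model_input` returns only the ingredient and instruction lines, so the generator would have to infer what the dish looks like from how it is made.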

This is an important test of AI’s power, as the paper, published on Cornell University’s arXiv.org, suggests: it shows a capacity for abstraction that we’ve assumed computers don’t have.

How it works

The method relies on Stacked Generative Adversarial Networks (GANs). The first stage analyzes a recipe, from ingredients to finishing touches, by converting the text into numeric vectors. The scientists call this process “text embedding,” and it is designed to capture meaning: recipes that say similar things end up with similar vectors.
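The toy example below illustrates the idea of a text embedding. It is an assumption-laden sketch, not the researchers' method: a trained model learns its embedding from data, whereas this uses simple feature hashing of words into a fixed-size vector. The dimension `DIM` and the helper names are hypothetical. It still shows the key property: two soup recipes land closer together than a soup and a burger.

```python
import math
import re
import zlib

DIM = 256  # hypothetical embedding size, chosen for illustration only


def embed_recipe(ingredients, instructions, dim=DIM):
    """Map a recipe's ingredients and instructions (no title) to a
    fixed-length, unit-length vector using feature hashing."""
    text = " ".join(ingredients + instructions).lower()
    tokens = re.findall(r"[a-z]+", text)  # keep words, drop numbers/punctuation
    vec = [0.0] * dim
    for tok in tokens:
        # crc32 is deterministic across runs, unlike Python's built-in hash()
        vec[zlib.crc32(tok.encode("utf-8")) % dim] += 1.0
    norm = math.sqrt(sum(v * v for v in vec))
    return [v / norm for v in vec] if norm else vec


def cosine(a, b):
    # Both vectors are unit length, so the dot product is the cosine similarity.
    return sum(x * y for x, y in zip(a, b))
```

A learned embedding would also place "simmer" near "boil" even though the words differ, which hashing cannot do; that is exactly the kind of semantic mapping a trained model adds.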

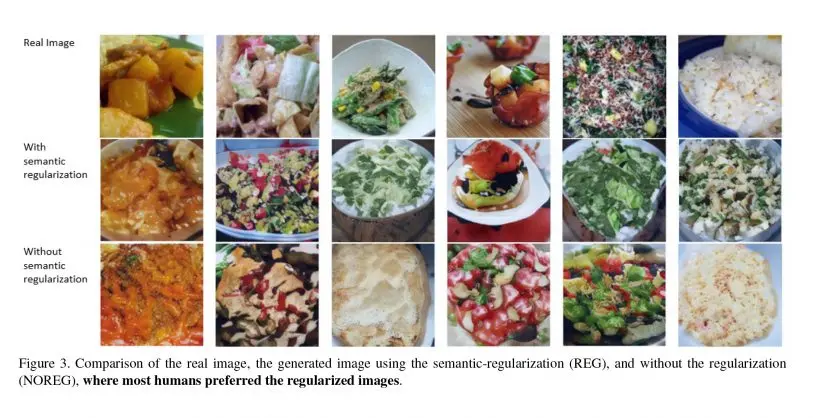

The second stage is a GAN that takes those vectors and compares them against the descriptions of more than 50,000 real-world food photos. After this training, the AI generates synthetic photos from new recipes. Granted, it does a better job with soups, rice dishes, and enchiladas than with dishes like Sunday roasts or burgers, whose shapes are more precisely defined. But the scientists say this is only because they trained it on low-resolution photos with poor illumination.
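The "adversarial" part can be sketched through its loss functions. The snippet below is the standard binary cross-entropy GAN objective, assumed here for illustration; the paper's exact losses may differ. The probabilities passed in are hypothetical stand-ins for a discriminator that scores (image, recipe embedding) pairs as real or generated.

```python
import math


def bce(p, target):
    """Binary cross-entropy for one predicted probability p."""
    eps = 1e-12
    p = min(max(p, eps), 1 - eps)  # avoid log(0)
    return -(target * math.log(p) + (1 - target) * math.log(1 - p))


def discriminator_loss(d_real, d_fake):
    # The discriminator should score (real photo, recipe) pairs near 1
    # and (generated image, recipe) pairs near 0.
    return bce(d_real, 1.0) + bce(d_fake, 0.0)


def generator_loss(d_fake):
    # Non-saturating generator loss: the generator is rewarded when the
    # discriminator mistakes its image for a real photo of the recipe.
    return bce(d_fake, 1.0)
```

Training alternates between the two: the discriminator lowers its loss by telling real food photos from fakes, and the generator lowers its loss by producing images the discriminator accepts as real photos of that recipe.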

In any case, this is a first in AI tech, and it will no doubt change the way we create and process imagery. Even without visually descriptive text, AI will eventually be able to analyze any text and create synthetic images from it. Brace yourself for fake porn videos derived from body-part descriptions and lists of fetishes.
